Almost ten months ago, I mentioned on this blog that I bought an ARM
laptop, which is now my main machine while away from home: a Lenovo
Yoga C630 13Q50. Yes, yes, I am still not away from home as much as I
used to be, as this pandemic is still somewhat of a thing, but I do
move around more.

My main activity in the outside world with my laptop is teaching. I
teach twice a week, and well, having a display for my slides and
for showing examples in the terminal and such is a must. However, as I
said back in August, one of the hardware support issues for this
machine is:

> No HDMI support via the USB-C displayport. While I don't expect
> to go to conferences or even classes in the next several months,
> I hope this can be fixed before I do. It's a potential important
> issue for me.

It has sadly not yet been solved… While many things have improved
since kernel 5.12 (the first I used), the Device Tree does not yet
hint at where external video might sit.

So, I went for the obvious: many people carry different kinds of video
adaptors. I carry a slightly bulky one: an RPi3.

For two months already (time flies!), I had an ugly contraption where
the RPi3 connected via Ethernet and displayed a VNC client, and my
laptop had a VNC server. Oh, but did I mention? My laptop works so
much better with Wayland than with Xorg that I switched, and am now a
happy user of the Sway compositor (a drop-in replacement for the i3
window manager). It is built over WLRoots, which is a great and
(relatively) simple project, but will thankfully not carry some of
Gnome or KDE's ideas, not even those I'd rather have. So it took a bit
of searching; I was very happy to find WayVNC, a VNC server for
wlroots-based Wayland compositors. I launched a second Wayland, to be
able to have my main session undisturbed and present only a window
from it.

The only problem: VNC is slow and laggy, and sometimes awkward. So I
kept searching for something better. And something better is, happily,
what I was finally able to do!

On the laptop, I am using wf-recorder to grab an area of the screen
and funnel it into a V4L2 loopback device (which allows it to be used
as a camera, solving the main issue with grabbing parts of a Wayland
screen):

```
/usr/bin/wf-recorder -g '0,32 960x540' -t --muxer=v4l2 --codec=rawvideo --pixelformat=yuv420p --file=/dev/video10
```

(yes, my V4L2Loopback device is set to `/dev/video10`). You will note
I'm grabbing a 960×540 rectangle, which is the top-left quarter of my
screen (1920x1080), offset to skip the Waybar. I think I'll increase
it to 960×720, as the projector to which I connect the Raspberry has a
4:3 output.
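As a rough sketch of the arithmetic behind picking the capture size: given a width and a target aspect ratio, the height follows directly. `capture_geometry` is a hypothetical helper of mine, not part of wf-recorder; it only builds the `X,Y WxH` string that the command above passes to `-g`.

```shell
#!/bin/sh
# Hypothetical helper (not part of wf-recorder): build a geometry string
# of the form "X,Y WxH" for a capture region with a given aspect ratio.
# Arguments: x offset, y offset, width, ratio width, ratio height.
capture_geometry() {
    geo_x=$1
    geo_y=$2
    geo_w=$3
    # Integer arithmetic: height = width * ratio_h / ratio_w
    geo_h=$(( geo_w * $5 / $4 ))
    printf '%s,%s %sx%s\n' "$geo_x" "$geo_y" "$geo_w" "$geo_h"
}

# Current 16:9 region, and the 4:3 region that would match the projector
capture_geometry 0 32 960 16 9
capture_geometry 0 32 960 4 3
```

The resulting string could then be used as the argument to `-g`, keeping the rest of the command unchanged.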

After this is sent to `/dev/video10`, I tell ffmpeg to send it via RTP
to the fixed address of the Raspberry:

```
/usr/bin/ffmpeg -i /dev/video10 -an -f rtp -sdp_file /tmp/video.sdp rtp://10.0.0.100:7000/
```

Yes, some uglier things happen here. You will note `/tmp/video.sdp`
is created on the laptop itself; this file describes the stream's
metadata so it can be used from the client side. I cheated and copied
it over to the Raspberry, doing an ugly hardcode along the way:

```
user@raspi:~ $ cat video.sdp
v=0
o=- 0 0 IN IP4 127.0.0.1
s=No Name
c=IN IP4 10.0.0.100
t=0 0
a=tool:libavformat 58.76.100
m=video 7000 RTP/AVP 96
b=AS:200
a=rtpmap:96 MP4V-ES/90000
a=fmtp:96 profile-level-id=1
```
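For readers not used to SDP: the two lines the receiving side mostly cares about here are `c=` (the connection address) and `m=` (the media type and port). Here is a small sketch that pulls both out of a description. `sdp_destination` is a hypothetical helper name, and the embedded sample is a trimmed copy of the file shown above.

```shell
#!/bin/sh
# Hypothetical helper: read an SDP description on stdin and print the
# connection address and video port as "address:port".
sdp_destination() {
    awk '
        $1 == "c=IN"    { addr = $3 }   # c=IN IP4 <address>
        $1 == "m=video" { port = $2 }   # m=video <port> RTP/AVP <payload type>
        END { print addr ":" port }
    '
}

# Trimmed sample, taken from the video.sdp listing above
sdp_destination <<'EOF'
v=0
c=IN IP4 10.0.0.100
m=video 7000 RTP/AVP 96
a=rtpmap:96 MP4V-ES/90000
EOF
```

This can be handy to double-check that the copy on the Raspberry still matches the address and port given to ffmpeg.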

People familiar with RTP will scold me: How come I'm streaming to the
unicast client address? I should do it to an address in the
224.0.0.0–239.255.255.255 multicast range. And it worked, sometimes. I
switched over to 10.0.0.100 because it works, basically always.
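For reference, the IPv4 multicast block is 224.0.0.0/4, so only the first octet needs to be checked to tell both kinds of destination apart. A minimal sketch follows; `is_multicast` is a hypothetical helper, not part of ffmpeg or of any tool mentioned here, and it assumes a well-formed dotted-quad address.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given IPv4 address lies in the
# multicast block 224.0.0.0/4 (first octet between 224 and 239).
# Assumes a well-formed dotted-quad address; no validation is done.
is_multicast() {
    first_octet=${1%%.*}
    [ "$first_octet" -ge 224 ] && [ "$first_octet" -le 239 ]
}

for addr in 239.1.2.3 10.0.0.100; do
    if is_multicast "$addr"; then
        echo "$addr: multicast"
    else
        echo "$addr: unicast"
    fi
done
```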

Finally, upon bootup, I have configured NoDM to start a session with
the user `user`, and dropped the following in my user's `.xsession`:

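```
# Keep the console and the X display from blanking or powering down
setterm -blank 0 -powersave off -powerdown 0
xset s off
xset -dpms
xset s noblank
# Play the RTP stream described by the SDP file, full screen
mplayer -msglevel all=1 -fs /home/usuario/video.sdp
```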

Anyway, as a result, my students are able to follow the pace of my
presentation much better, and I'm able to do some tricks better
(particularly when they require quick reaction times, as often happens
when dealing with concurrency and such issues).

Oh, and of course, in case it's of interest to anybody: knowing that
SD cards are anything but reliable in the long run, I wrote a vmdb2
recipe to build the images. You can grab it here; it requires some
local files to be present to be built. Some are the ones I copied over
above, and the other ones are surely of no interest to you (such as my
public ssh key or such :-] )

What am I still missing? (read: Can you help me with some ideas?)

- I'd prefer having Ethernet-over-USB. I have the USB-C Ethernet
  adapter, which powers the RPi and provides a physical link, but I'm
  sure I could do away with the fugly cable wrapped around the
  machine…
- Of course, if that happens, I would switch to a much sexier Zero
  RPi. I have to check whether the video codec is light enough for a
  plain ol' Zero (armel) or I have to use the much more powerful Zero
  2… I prefer sticking to the lowest possible hardware!
- Naturally… The best would be to just be able to connect my
  USB-C-to-{HDMI,VGA} adapter, that has been sitting idly… One
  day, I guess…

Of course, this is a blog post published to brag about my stuff, but
also to serve me as persistent memory in case I need to recreate
this…

Twenty years… A seemingly big, very round number, at least for me.
I can recall several very well-known songs mentioning this
timespan:

Maybe by sheer chance it was today also that we spent the evening at
Max's house. I never worked directly with Max, but we both worked at
Universidad Pedagógica Nacional at the same time back then.

But… Of course, a single twentyversary is not enough!

I don't have the exact date, but I guess I might be off by some two or
three months due to other things I remember from back then.

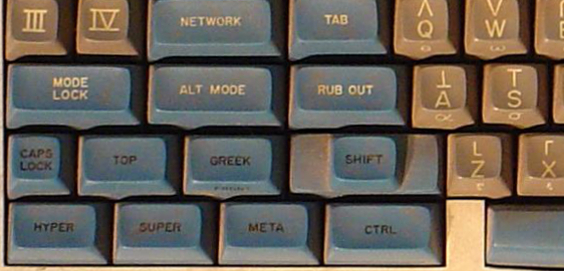

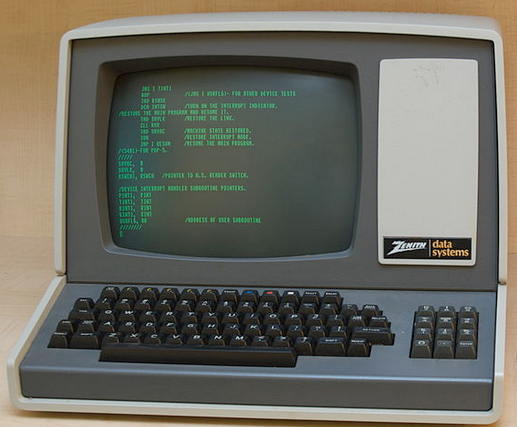

This year, I am forty years old as an Emacs and TeX user!

Back in 1983, on Friday nights, I went with my father to IIMAS (where
I'm currently affiliated as a PhD student, and where he was a
researcher between 1971 and the mid-1990s) and used the computer…
one of the two big computers they had in the Institute. And what could
a seven-year-old boy do? Of course, use the programs this great
Foonly F2 system had: Emacs and TeX (this is still before LaTeX).

40 years… And I still use the same base tools for my daily work, day
in, day out.

I'm finally at the part of my PhD work where I am tasked with
implementing the protocol I claim improves on the current
situation. I wrote a script to deploy the infrastructure I need for
the experiment, and was not expecting any issues… I am not (yet)
familiar with the Go language (in which

I m finally at the part of my PhD work where I am tasked with

implementing the protocol I claim improves from the current

situation. I wrote a script to deploy the infrastructure I need for

the experiment, and was not expecting any issues I am not (yet)

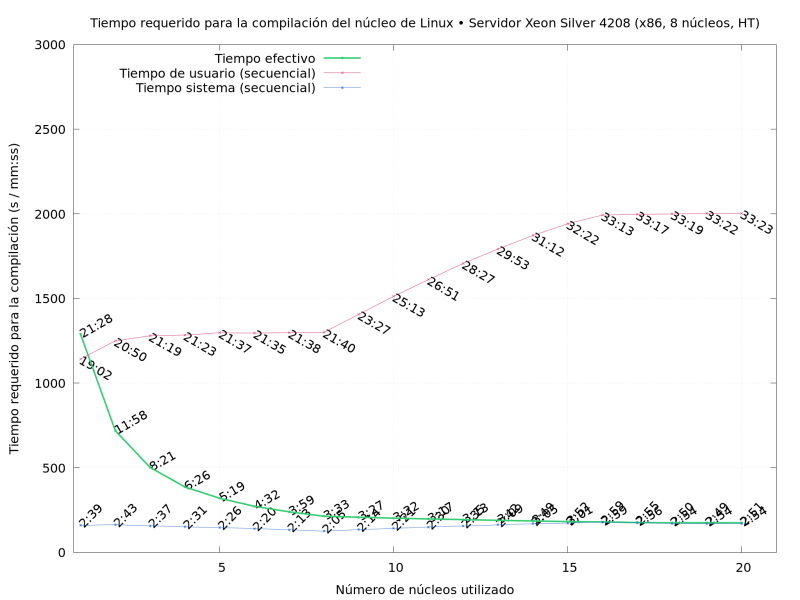

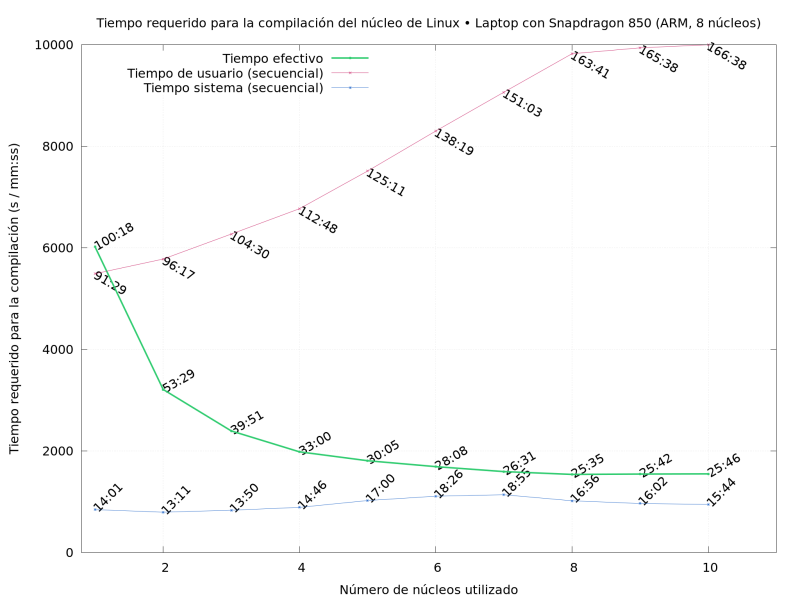

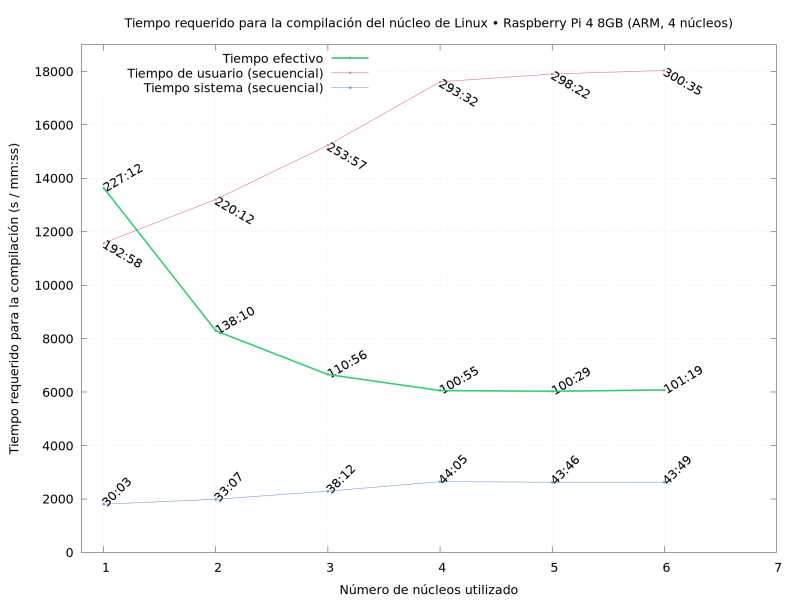

familiar with the Go language (in which  So What did I do? I compiled Linux repeatedly, on several of the

machines I had available, varying the

Yes, I knew from the very beginning that using this laptop would pose
a challenge to me in many ways, as full hardware support for ARM
laptops is nowhere near as easy as for plain boring x86 systems. But
the advantages far outweigh the inconvenience (i.e.

As a preparation and warm-up for DebConf in September, the Debian
people in India have organized a miniDebConf. Well, I don't want to be
unfair to them: They have been regularly organizing miniDebConfs for
over a decade, and while most of the attendees are students local to
this state in South India (the very tip of the country; Tamil Nadu
is the Eastern side, and Kerala, where Kochi is and DebConf will be
held, is the Western side), I have talked with attendees from very
different regions of this country.

This miniDebConf is somewhat similar to similarly-scoped events I have
attended in Latin America: It is mostly an outreach conference, but
it's also a great opportunity for DDs in India to meet in the famous
hallway track.

India is incredibly multicultural. Today at the hotel, I was somewhat
surprised to see people from Kerala trying to read a text written in
Tamil: Not only are the languages different, but so are the writing
systems. From what I read, Tamil script is a bit simpler than Kerala's
Malayalam, although they come from similar roots.

I was admitted to the first cohort of students of this course (please
note I'm not committing him to run free courses ever again! He has
said he would consider doing so, especially to offer a different time
better suited for people in Asia).

I have wanted to learn some Rust for quite some time. About a year
ago, I bought a copy of

Updates

Back in April I wrote about

Years ago, it was customary that some of us stated publicly the way we
think at the time of Debian General Resolutions (GRs). And even if we
didn't, vote lists were open (except when voting for people,
i.e. when electing a DPL), so if interested we could understand what
our different peers thought.

This is the first vote, though, where a Debian vote is protected under

Turns out, I have been suffering from quite bad skin infections for a
couple of years already. Last Friday, I checked in to the hospital,
with an ugly, swollen face (I won't put you through that), and the
hospital staff decided it was in my best interests to trim my
beard. And then some more. And then shave me. I sat in the hospital
for four days, getting soaked (medical term) with antibiotics and
other stuff, got my prescriptions for the next few days, and well, I
really hope that's the end of the infections. We shall see!

So, this is the result of the loving and caring work of three
different nurses. Yes, not clean-shaven (I should not trim it
further, as shaving blades are a risk of reinfection).

Anyway… I guess the bits of hair you see all over the place will not
take too long to become a beard again, even get somewhat
respectable. But I thought some of you would like to see the real me.

PS- Thanks to all who have reached out with good wishes. All is fine!

Before anything else: Go visit the

So, LibrePlanet, the FSF's conference, is coming!

I much enjoyed attending this conference in person in March 2018. This
year I submitted a talk again, and it got accepted. Of course, given
the conference is still 100% online, I doubt I will be able to go 100%
conference-mode (I hope to catch a couple of other talks, but… well,
we are all eager to go back to how things were before 2020!)
